Vishay Honors Mouser With Top Awards for Distribution Excellence

DALLAS & FORT WORTH, Texas--(BUSINESS WIRE)--Jun 14, 2023--

2023-06-14 21:29

PewDiePie: From making amateur videos to dominating YouTube, 3 untold secrets about popular Internet icon

PewDiePie, a self-proclaimed introvert, turned to video games for comfort as a way to cope with the demands of daily life

2023-06-04 14:58

What’s Trending Today: Delta Air’s Plane Slide Deployed by Accident, Tucker Carlson, Reddit Blackout

Welcome to Social Buzz, a daily column looking at what’s trending on social media platforms. I’m Caitlin Fichtel,

2023-06-12 21:20

Knightscope Announces Automated Gunshot Detection

MOUNTAIN VIEW, Calif.--(BUSINESS WIRE)--Jul 14, 2023--

2023-07-14 21:58

What does Montana's TikTok ban actually mean?

Well, would you look at that: Montana banned TikTok. Why? "To protect Montanans’ personal and

2023-05-19 00:50

Save 54% on a lifetime of language lessons from Rosetta Stone

TL;DR: As of July 21, get lifetime access to all Rosetta Stone Languages for only

2023-07-21 17:50

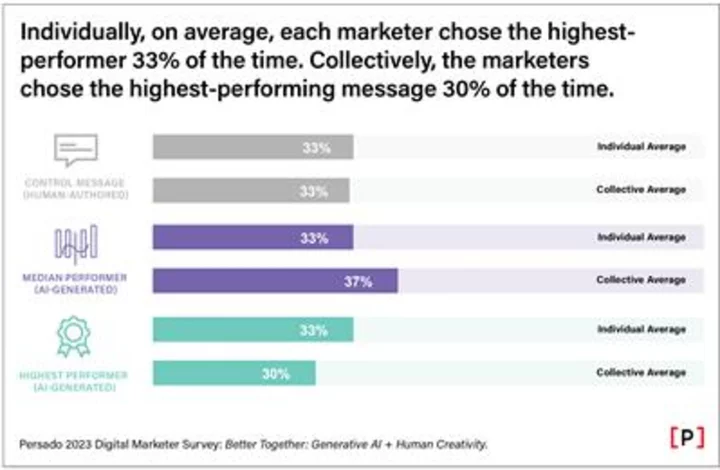

New Persado Study Quantifies Revenue Lift from Pairing Marketing Teams with Generative AI

NEW YORK--(BUSINESS WIRE)--May 11, 2023--

2023-05-11 21:52

Get your very own refurbished Echo Dot for 26% off

Save $14: As of July 17, the Certified Refurbished Echo Dot (5th Gen) is on

2023-07-17 23:50

US Defense Tech CEOs Urge Speedier Procurement to Counter China

Almost 20 defense technology executives plan meetings Monday with Biden administration officials, House Speaker Kevin McCarthy and other

2023-09-15 22:56

Explainer-Reddit protest: Why are thousands of subreddits going dark?

Thousands of popular Reddit communities dedicated to topics ranging from Apple Inc to gaming and music locked out

2023-06-13 01:51

What are semiconductors and how are they used?

Governments around the world are scrambling to boost semiconductor production.

2023-08-04 00:51

Token Loyalty Card DMCC Proudly Presents Cleopatra, the World's First Multi-Branded Web3 Loyalty Club

DUBAI, United Arab Emirates--(BUSINESS WIRE)--Jul 5, 2023--

2023-07-06 00:29

You Might Like...

MacBook Air 15-inch: Apple reveals big version of its smallest laptop

TikTok seeks up to $20 billion in e-commerce business this year - Bloomberg News

ChatGPT creators try to use artificial intelligence to explain itself – and come across major problems

A woman was found trapped under a driverless car. It's not what it looks like, the car company said

Who is Hennessy? Kai Cenat expresses desire to meet Cardi B's sister during livestream with rapper Offset

Yazaki-Torres Relies on Boomi To Modernize Business Systems

Garuda Tests First Commercial Flight On Biofuel to Cut Emissions

Prep for CompTIA certifications with this $50 training course bundle