Apple headset: Everything we expect from VR goggles as Apple prepares for release date

Apple is about to launch its first new platform in almost a decade, and what could be its most decisive product since the iPhone. A new mixed reality headset is due to be unveiled during its Worldwide Developers Conference event on Monday, though it might not go on sale then. When it is revealed, it could decide the future of Apple, its users and the devices that everyone uses. Here’s everything we know about the headset, ahead of Monday’s likely big reveal.
Price
One thing has been discussed at length about the headset: how much exactly it will cost. And most of that discussion has agreed that the answer will be a lot. Rumours have pointed at $3,000 for the first version of the headset, which would make it very expensive even among the otherwise expensive Apple products. That high price is likely to be a result of the high-end components that are required to power the headset, which is rumoured to have significantly higher performance than its competitors.
Apple is said to be expecting far fewer people to buy the headset than would buy its other products. As such, the high price might not translate to high revenues, and Apple might not be expecting it to.
There is some chance, however, that all of those rumours are wrong; when the iPad was released, everyone thought it would cost $999, and Steve Jobs took great joy in having proven the “pundits” wrong when he unveiled it at $499. It’s helpful for Apple if people think that it will cost more and it can then reveal that it’s actually just quite expensive, rather than very expensive.
Release date
Monday is almost certain to be the day the headset is revealed. But it would be very surprising indeed if it went on sale any time soon after that. Recent rumours have suggested that it will not actually be on shelves – or however it is sold – until much later in the year. It’s likely that Apple will want it ready for the important holiday period, which would mean getting it on sale in October or November.
Apple, under Tim Cook, has developed a much-envied knack for announcing products and having them ready to go soon after, even when they are sold in such vast quantities as the iPhone. But it has still left big gaps between the announcement and the introduction of a product in the past, especially when it represents a whole new platform. The Apple Watch was announced during the September release event for the iPhone 6, but it did not actually arrive until the end of the following April. The move to Apple Silicon for Macs was announced in June 2020, at WWDC, but the first computers using it did not actually arrive until the following November.
Those delays meant that Apple could be prepared, ensuring that it could have enough of the hardware made in time and not have to worry about the design or other details leaking. But waiting also meant that developers could be prepared, too, and ensure that their apps and other software were ready for the new platform.
Spec
Apple is said to be very focused on ensuring that the headset brings a high-quality experience. That means a much more luxurious specification than existing headsets at this kind of price point. That includes very detailed and powerful displays. Reports have suggested that it could offer up to 5,000 nits of brightness – enough for HDR – and a total 8K resolution from two Micro OLED displays that should allow for rich detail and fast response times.
It is set to be powered by equally high-end hardware. It will have two chips that ensure it is able to work on its own without a companion device, reports have indicated, and provide stable and quick output.
Design
Leaked designs have suggested that the headset will look something like the AirPods Max combined with an Apple Watch: the same unapologetic aluminium used on both of those devices, and the soft material that it is combined with to make them actually wearable.
That would certainly make sense, since Apple has always been focused on ensuring that its products do not just look nice on their own but sit well together, too. And it will no doubt have learned plenty from its earlier work in wearable devices, including headphones and watches. But little has leaked about what the headset will actually look like. Apple could opt to go for some other look entirely.
Sensors
VR headsets need sensors both to know where they are and to know what their user is doing. The headset is expected to include a strong array of them. It will use 3D sensors to know where users’ hands are, as well as any other objects. They are likely to be similar to the LiDAR tools that are in the iPhone and iPad, and can already be used for mapping rooms, for instance.
It will also have tools to detect more about the person wearing it. Reports suggest that it will be able to see people’s facial expressions, as well as including microphones for voice control through Siri.
It is also expected to include more standard cameras, which will allow users to see the real world and overlay virtual objects on it. That will be controlled using a dial similar to the one on the Apple Watch and AirPods Max, which can be used to add more or less of the real world, reports have indicated.
The headset is also likely to be able to connect to the iPhone for some uses, such as text input. And it will probably be able to use other earphones, such as the AirPods – Apple has already been working hard on “spatial audio” features for them, which would slot in nicely with virtual reality.
Software
Apple is said to be working on a new AR/VR operating system for the headset, with rumours suggesting that it could be called xrOS or realityOS. But there has been little reported about how exactly that software will work. It will probably be based on Apple’s other platforms – which all have a fairly consistent look – tailored so that users can see it in 3D space.
Apple has been rumoured to be working on augmented reality versions of its own apps, such as FaceTime. And part of the reason for launching at WWDC is probably so that it can help developers start working on their apps, too. But one of the key unanswered questions is how they will all work, both individually and within that broader operating system.
Problems?
No major platform of this kind could be without problems. But numerous reports have suggested that the new headset could have a few more than most. Throughout its development, some both within and outside Apple have argued that the product is either not a good fit or is not ready yet in its current form. At the same time, others have pressed on, arguing that it is better to get some version of the headset out into the world and develop it from there.
Even in recent days, as the release of the headset nears, reports have suggested issues. Bloomberg’s Mark Gurman reported that even in current testing the headset appears to be getting hot, for instance.
Another headset?
The mixed reality goggles, due to be revealed at WWDC, are thought to be just one part of Apple’s big plan for augmented and virtual reality. Apple is also said to be working on a separate headset that could be released at a later date, and for a lower price.
Eventually, too, Apple might want to take some of the technology from the goggles and integrate it into glasses that allow people to see the world normally but with virtual objects imposed on top. Rumours have long suggested that is the eventual aim – but it might never come.

2023-06-03 02:52

Coinbase CEO says complying with SEC request would have been the 'end of the crypto industry in the US'

The Securities and Exchange Commission asked Coinbase to halt trading on all cryptocurrencies except for bitcoin before it sued the company in June, Coinbase's chief executive told the Financial Times.

2023-08-01 02:22

This is the reason why self-service checkouts are fitted with mirrors

With the increasing number of self-service checkout machines popping up in stores for convenience, there is one simple feature that is used to put off potential shoplifters - mirrors. There's a good chance that you've looked at your reflection in the screens fitted to these machines. Their purpose is for potential shoplifters to catch sight of themselves, in the hope of making them feel guilty. This pang of conscience is meant to prevent them from committing any crime (the mirror is not just there for vanity purposes, which is what most of us use it for). Research also backs up the theory that people who see themselves in a mirror are less likely to do something bad. A 1976 study from Letters of Evolutionary Behavioural Science found that when people are around mirrors, they "behave in accordance with social desirability". "Mirrors influence impulsivity, a feature that is closely related to decision-making in both social and non-social situations." When participants in the experiment were looking at mirrors, their "private self-awareness was activated" by them and as a result influenced "decision-making as a non-social cues". Similarly, Psychology Today notes how a mirror allows "people to literally watch over themselves" and this "dramatically boosts our self-awareness". Meanwhile, the issue of self-service checkouts and shoplifting was highlighted in a report by Mashed last year, which appeared to confirm that Walmart's attempt at combatting the problem was a psychological one: the addition of mirrors (though Walmart, alongside other supermarkets, has never confirmed the purpose of the mirrors at its self-service checkouts).

2023-10-09 18:19

AI Drug Discovery Firm Recursion Surges Following $50 Million Nvidia Investment

The latest sign of investors’ unrelenting demand for all things artificial intelligence related: a record rally in drug

2023-07-13 02:51

Apple Catalyst Sought After Drab Headset Reaction

The stock market’s cool response to the launch of Apple Inc.’s mixed-reality headset left investors pondering what will

2023-06-06 19:17

Snowflake Concludes its Largest Data, Apps, and AI Event with New Innovations that Bring Generative AI to Customers’ Data and Enable Organizations to Build Apps at Scale

No-Headquarters/BOZEMAN, Mont.--(BUSINESS WIRE)--Jun 30, 2023--

2023-06-30 21:23

Forza Motorsport Game Pass Release Date

Forza Motorsport will be coming to Xbox Game Pass. Find out when in this article.

2023-10-07 05:19

Smartphones Are Stuck in a Rut. AI Could Be the Cure.

Apple's latest iPhones and Google's new Pixels all have the same issue: a lack of real innovation. Here's how AI could make phones exciting again.

2023-10-07 20:17

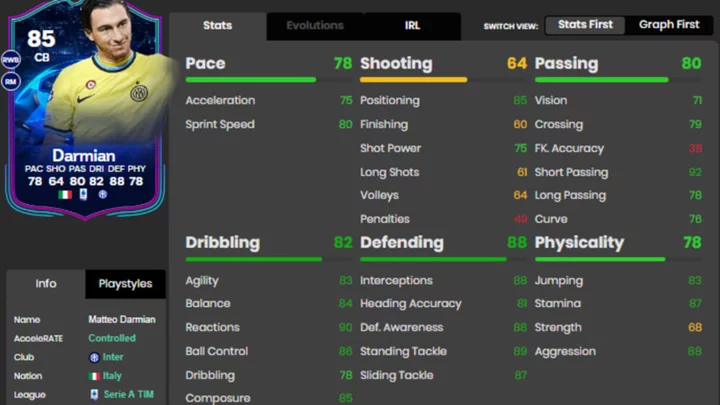

Matteo Darmian FC 24 Challenges: How to Complete the Road to the Knockouts Objective

Matteo Darmian FC 24 challenges detailed for the Road to the Knockouts objective set. Here's how to complete each objective.

2023-10-07 01:50

EU Warns Twitter Must Bolster Resources Ahead of Elections

Elon Musk’s Twitter needs to increase its resources if it wants to comply with new European regulations ahead

2023-06-23 09:45

Threads user count falls to new lows, highlighting retention challenges

Threads, Meta's Twitter rival, is struggling to retain users roughly a month after its highly publicized launch, according to fresh industry estimates showing that app engagement has fallen to new lows.

2023-08-04 01:19

How to Pre-load Forza Motorsport

Step-by-step details for pre-loading Forza Motorsport (2023) on every available platform including Xbox and PC.

2023-10-04 01:20

You Might Like...

Analysis-Southern Europe braces for climate change-fuelled summer of drought

It’s Early Days for the AI PC Growth Story

Webcash Global Launches Global Fund Management Solution ‘WeMBA’ in Vietnam

Google Working on 'Link Your Devices' Feature For Data and Call Sharing

Cavinder Twins: Dating life, family background and early career

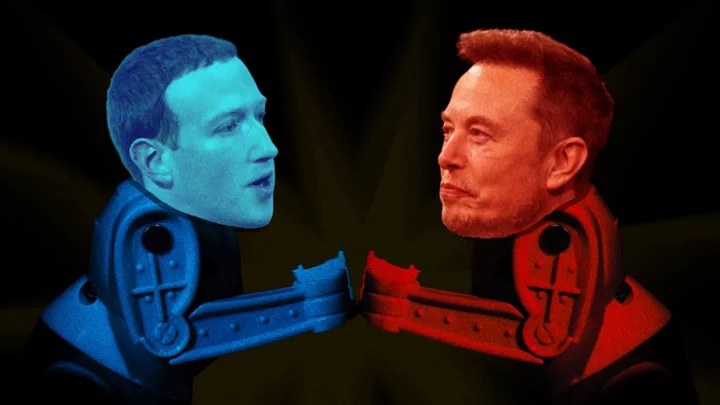

Elon Musk and Mark Zuckerberg still want to cage fight and livestream it

Amazon Echo Show 5 vs. Echo Show 8 (2nd gen): Which is right for you?

7 Dangerous and Deadly Toys From History