Lock in your iPhone 15's safety with Speck's ClickLock™ technology

The most highly anticipated day in tech, Apple’s fall event, has come and gone, and

2023-09-22 04:45

Celsius Network faces roadblocks in pivot to bitcoin mining

By Dietrich Knauth NEW YORK Crypto lender Celsius Network may have to seek a new creditor vote on

2023-12-01 03:16

How to Save in Diablo 4

Blizzard automatically saves your Diablo 4 in-game progress in real time, so you never have to save your game manually.

2023-06-08 01:58

Ethereum Software Infrastructure Provider Flashbots Raises $60 Million

Flashbots, a provider of software used to package Ethereum blockchain transactions, raised $60 million to help finance the

2023-07-26 04:46

Apple launches Final Cut Pro and Logic Pro on iPad, finally bringing professional apps to tablets

Apple will bring Final Cut Pro and Logic Pro to the iPad, answering questions about the future of its high-powered tablets. The professional video and music editing apps have been remade for Apple’s tablets, with new touch interfaces and additional features beyond those of their Mac counterparts.

Apple has been making the iPad Pro for years, with the first released in 2015. Recent models have brought it in line with Apple’s laptops, using the same chips for faster performance. But at the same time, Apple has been relatively slow in adding professional apps to the platform that can make use of that computing capability. That had led some to wonder whether Apple was truly committed to its iPads being a professional platform.

Now Apple has put its two main professional and creative apps onto the platform, and they will arrive later this month.

“We’re excited to introduce Final Cut Pro and Logic Pro for iPad, allowing creators to unleash their creativity in new ways and in even more places,” said Bob Borchers, Apple’s vice president of worldwide product marketing, in a statement. “With a powerful set of intuitive tools designed for the portability, performance, and touch-first interface of iPad, Final Cut Pro and Logic Pro deliver the ultimate mobile studio.”

The new versions of the apps are largely similar to their Mac counterparts. They include the same basic design and similar functionality. The updates do, however, add some tools within the iPad versions, such as a new sound browser in Logic Pro. They also include new options built specifically for the tablet, such as support for the Apple Pencil.

Customers will have to pay for the iPad versions of the apps separately, even if they own the desktop ones, with each app costing £4.99 per month or £49 per year. Final Cut Pro requires an M1 chip or later, Logic Pro needs an A12 chip or later, and both require the latest version of the operating system. Both apps will be available from 23 May.

Apple’s announcement is unusual in that it comes just a month before its big software event, the Worldwide Developers Conference, which is held at the beginning of June. Apple usually announces new updates to its own apps at that event.

2023-05-09 21:59

UK to work with leading AI firms to ensure society benefits from the new technology

LONDON British Prime Minister Rishi Sunak and the bosses of leading AI companies OpenAI, Google DeepMind, and Anthropic

2023-05-25 04:18

Lightning eMotors Announces Production Launch of Next-Gen Lightning ZEV4™ Work Trucks

LOVELAND, Colo.--(BUSINESS WIRE)--Jun 15, 2023--

2023-06-15 20:18

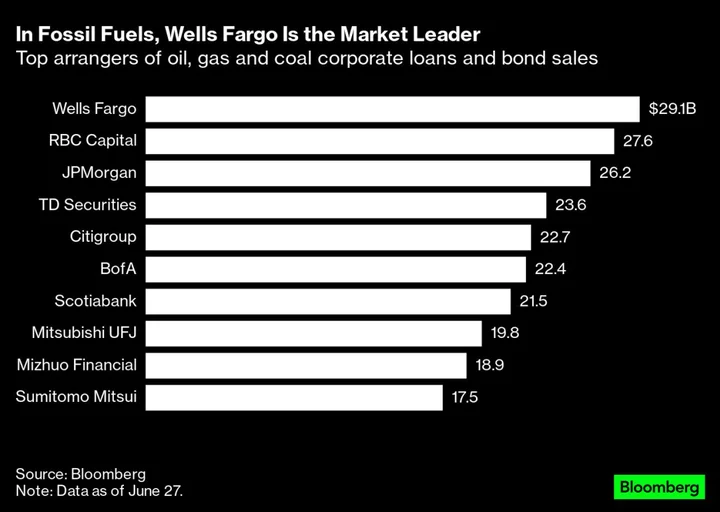

Green Bonds Take Big Lead Over Fossil-Fuel Debt Deals

For the first time, companies and governments are raising considerably more money in the debt markets for environmentally

2023-07-05 18:57

CommentSold Announces Live-Selling Strategic Partnership With TikTok

LOS ANGELES--(BUSINESS WIRE)--Aug 17, 2023--

2023-08-17 21:17

South Africa Declares National Disasters After Fall Floods, Storms

South Africa classified floods and storms that hit three coastal provinces in September and October as national disasters,

2023-11-08 18:18

Netflix shielded from Hollywood strike by global crew, strong pipeline

By Samrhitha A Netflix investors will assess risks from the ongoing strike in Hollywood when the company reports

2023-07-17 18:16

Keep your hands free with this $38 mini body cam

TL;DR: As of June 19, get the Mini Body Camera Video Recorder for just $37.99

2023-06-19 17:56

You Might Like...

Social media firms should reimburse online purchase scam victims – Barclays

Store your summer memories in 10TB of cloud storage

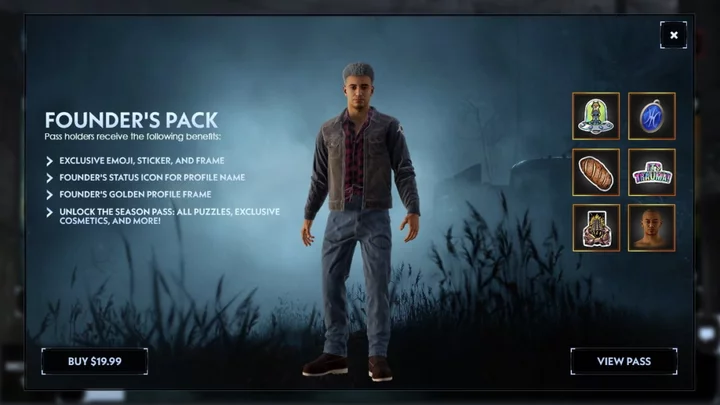

Silent Hill: Ascension Falls Flat With Glib Approach to Trauma, Microtransactions

Mastercard, Binance to end crypto card partnership

Hunter Fan Company Simplifies Home Improvement with HunterExpress®

Only 10 per cent of people on Earth can find the hidden objects in these four puzzles

Fed warned Goldman's fintech unit on risk, compliance oversight -FT

Valve is now selling refurbished Steam Decks for over $100 off